Zombie Walk: Turning an Image into a 3D Model with DeepMotion

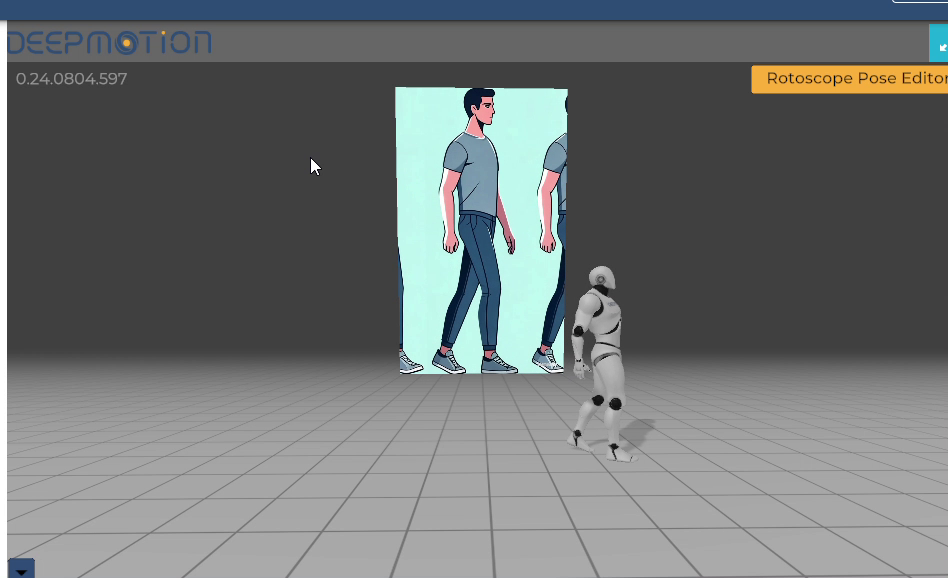

I recently ran a little experiment to animate a character walking, starting from a single image. Why does this matter? Well, I was chatting with a game developer friend who mentioned that creating characters—especially their walk cycles—is one of the toughest challenges in game development. That sparked an idea: if I could use AI to streamline this process, it might speed up game development or even offer a new solution. Here’s a quick glimpse of the end result with the 3D model mimicking the pose of a 2D character.

And so, my journey began.

The process

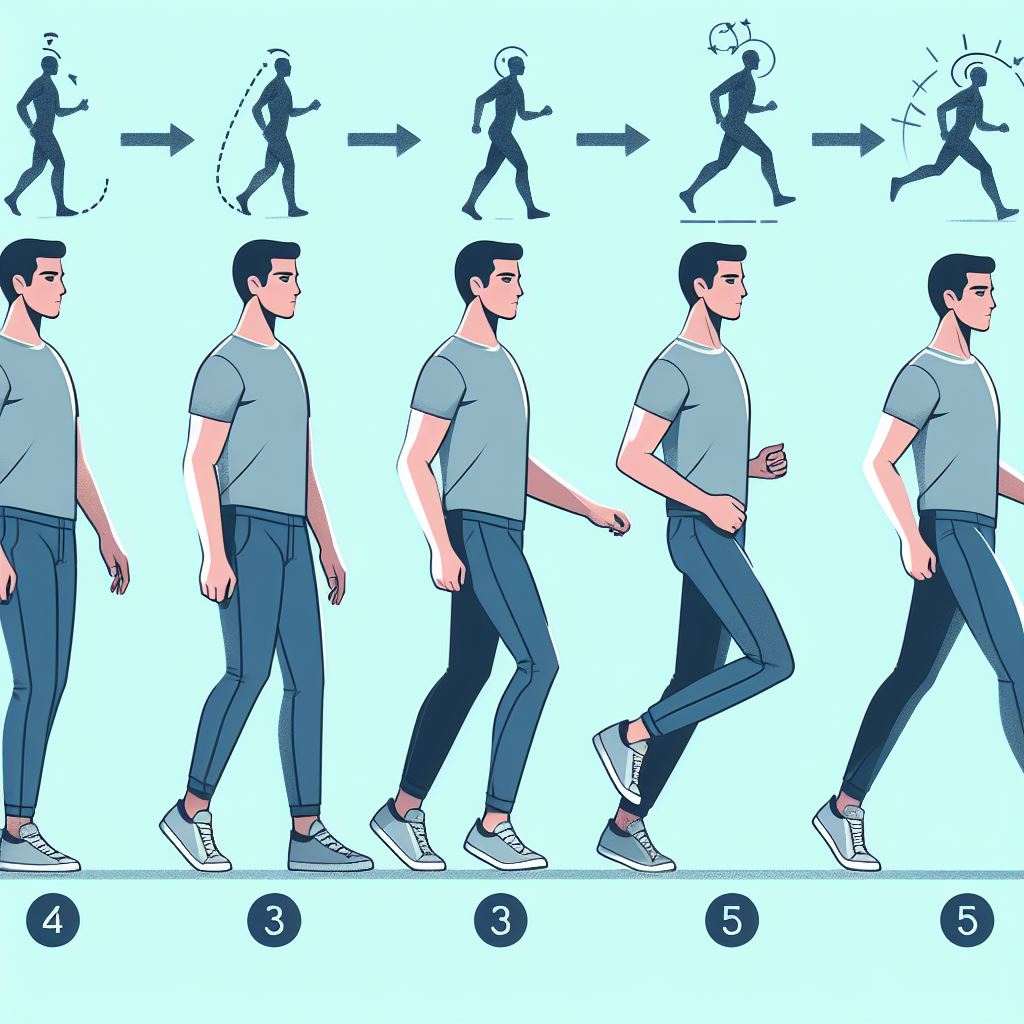

First, I used Bing Image Generator to create an image of a character in various stages of a walk cycle—stopping, stepping with the left foot, stepping with the right, and mid-stride. The results were surprisingly solid, as you can see below.

Next, I took that image into RunwayML to animate it into a video. The output wasn’t perfect, but it was decent enough to work with. Here’s the video output from RunwayML:

The real challenge came when I needed to convert this into a game-ready 3D format. I needed a tool that could translate the motion from the video into a 3D model, something I could export into a game engine like Unity or Unreal Engine.

That’s when I discovered DeepMotion, an AI tool designed to generate 3D animations from text, images, or videos.

I ran my animated walk cycle through their ‘Animate 3D’ video-to-3D-animation model, and the result? Well, my 3D character moves more like a zombie than a polished game character. It’s not ideal, but I’m calling it a win for a first attempt.

The final output:

Below is the video of the robot 3D model from DeepMotion, showing how the walking motion transferred from the video to the 3D character in their browser studio. I also tested this workflow with another image, a robot I’d generated earlier with Bing Image Generator. DeepMotion handled this one better, and I’ve included it at the end of the video so you can check out the results for yourself:

Some feedback I got suggested it moves like a zombie—and honestly, I can’t disagree. It’s more undead than lifelike, but here’s the output for you to judge:

DeepMotion allows the final output to be exported in the following formats: FBX, BVH, GLB, and MP4. These can be integrated into existing game engines for quick prototyping. The entire workflow can be described in 4 steps:

Text (prompt for image generation) → Image → Video (animated from image) → 3D model

It is worth mentioning that DeepMotion also has a text-to-3D model (I haven’t tested this personally) that would simplify this workflow from 4 steps to 2:

Text → 3D model
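Before dropping an exported file into Unity or Unreal Engine, a quick sanity check can confirm it is the format you expect and that the motion data actually made it through. The Python sketch below is only an illustration and not something DeepMotion provides. The function names `sniff_export` and `bvh_summary` are made up for this example, and it relies only on the commonly documented file signatures (the `glTF` magic in GLB, the `Kaydara FBX Binary` header, the `ftyp` box in MP4) and the plain-text layout of BVH files.

```python
import struct


def sniff_export(data: bytes) -> str:
    """Guess the export format from the first bytes of a file (hypothetical helper)."""
    # GLB: 4-byte magic "glTF", then little-endian uint32 version and total length.
    if data[:4] == b"glTF" and len(data) >= 12:
        version, length = struct.unpack_from("<II", data, 4)
        return f"GLB (glTF version {version}, declared length {length} bytes)"
    # Binary FBX files begin with this ASCII signature.
    if data.startswith(b"Kaydara FBX Binary"):
        return "FBX (binary)"
    # MP4 files usually carry an 'ftyp' box type at byte offset 4.
    if data[4:8] == b"ftyp":
        return "MP4"
    # BVH is plain text whose first keyword is HIERARCHY.
    if data.lstrip().startswith(b"HIERARCHY"):
        return "BVH"
    return "unknown"


def bvh_summary(text: str) -> dict:
    """Count joints and read the frame count and clip duration from BVH text."""
    joints = 0
    frames = None
    frame_time = None
    for line in text.splitlines():
        words = line.split()
        if not words:
            continue
        if words[0] in ("ROOT", "JOINT"):
            joints += 1
        elif words[0] == "Frames:":
            frames = int(words[1])
        elif words[:2] == ["Frame", "Time:"]:
            frame_time = float(words[2])
    duration = None
    if frames is not None and frame_time is not None:
        duration = frames * frame_time
    return {"joints": joints, "frames": frames, "duration_s": duration}


# Tiny in-memory BVH clip so the example runs without any exported file.
sample_bvh = (
    "HIERARCHY\nROOT Hips\n{\n  JOINT Spine\n  {\n  }\n}\n"
    "MOTION\nFrames: 2\nFrame Time: 0.5\n0 0 0\n0 0 0\n"
)
print(sniff_export(sample_bvh.encode()))
print(bvh_summary(sample_bvh))
```

With a real export you would read the file in binary mode and pass the first few bytes to `sniff_export`, then decode a BVH file as text for `bvh_summary`. A frame count or duration much shorter than the source video is an early hint that the walk cycle did not transfer cleanly.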

While this process—going from images to video to 3D models—isn’t perfect yet, I think it’s a promising start. As AI tools improve, I’m hopeful this could lead to better outcomes in the future. There are other 3D animation and AI studio tools out there too, and I’m excited to experiment with them. If I can find one that works even better with videos, it might just speed up my game development process—or at least help out some game devs along the way.

PS: Music used in the video is from French Fuse ft. Onset Music Group - Maphupho Fezeka 🇿🇦